AI Strategy in 2024 for the Rest of Us

Marton Trencseni - Fri 04 October 2024 - meta

Introduction

The Generative AI space exploded in 2023 and 2024, becoming a billion-dollar business even for young startups, and adding a trillion dollars to Nvidia's market capitalization on the Nasdaq.

In 2024 the AI space expanded further, with new vendors, new funding, new foundational models and a plethora of related tooling. To keep the discussion focused, this piece covers textual AI models, which are generally seen as the most important kind, both as a potential path towards AGI and as the basis for most applications.

Landscape

What are the major themes in late 2024 for textual Generative AI?

- Numerous LLM providers.

The number of companies offering foundational models is already large and increasing. The biggest players and models are:

  - OpenAI: 4o, 4o-mini, o1-preview, o1-mini
  - Google: gemini-1.5-flash, gemini-1.5-pro, gemini-1.0-pro, aqa
  - Meta: llama-3.1, llama-3
  - Microsoft: phi-3-mini, phi-3-small, phi-3-medium (small language models)
  - Anthropic: claude-3.5-sonnet, claude-3-haiku, claude-3-opus
  - Mistral: mistral-7b
  - Databricks: dbrx
  - Twitter/X: grok
  - UAE: falcon-mamba-7b, falcon-2-11b, falcon-7b, falcon-40b, falcon-180b
  - .. and many more ..

- Commercial and open models.

Most types of software have been commoditized over the past 30 years, and there are best-in-class free or open source options in most categories, for example: Linux (server operating system), Android (mobile operating system), Chrome (browsers), Postgres (OLTP databases), and countless others.

In the LLM space, this evolution occurred at breakneck speed, and in fact many of the above models are open source or open weight. Open weight means that the trained model weights can be downloaded and used as-is or further fine-tuned.

The primary example of a commercial LLM is the OpenAI offering: little is known about its internals, it cannot be downloaded, and it can only be accessed through pay-per-use API calls. The primary example of an open weight model is Meta's llama series, which can be downloaded and used freely.

Although OpenAI's models are currently best-in-class, the open models lag by only about one generation, or 9-12 months!

- Evolution of Small and Medium Language Models.

There is a significant trend towards the development of small and medium-sized language models that require less computational power and storage. These models are becoming increasingly efficient, enabling them to run on personal devices such as laptops and smartphones. This evolution democratizes access to AI, allowing more users and applications to leverage advanced language models without the need for extensive infrastructure. Examples include lightweight versions of existing models and newly designed architectures optimized for lower resource consumption. -

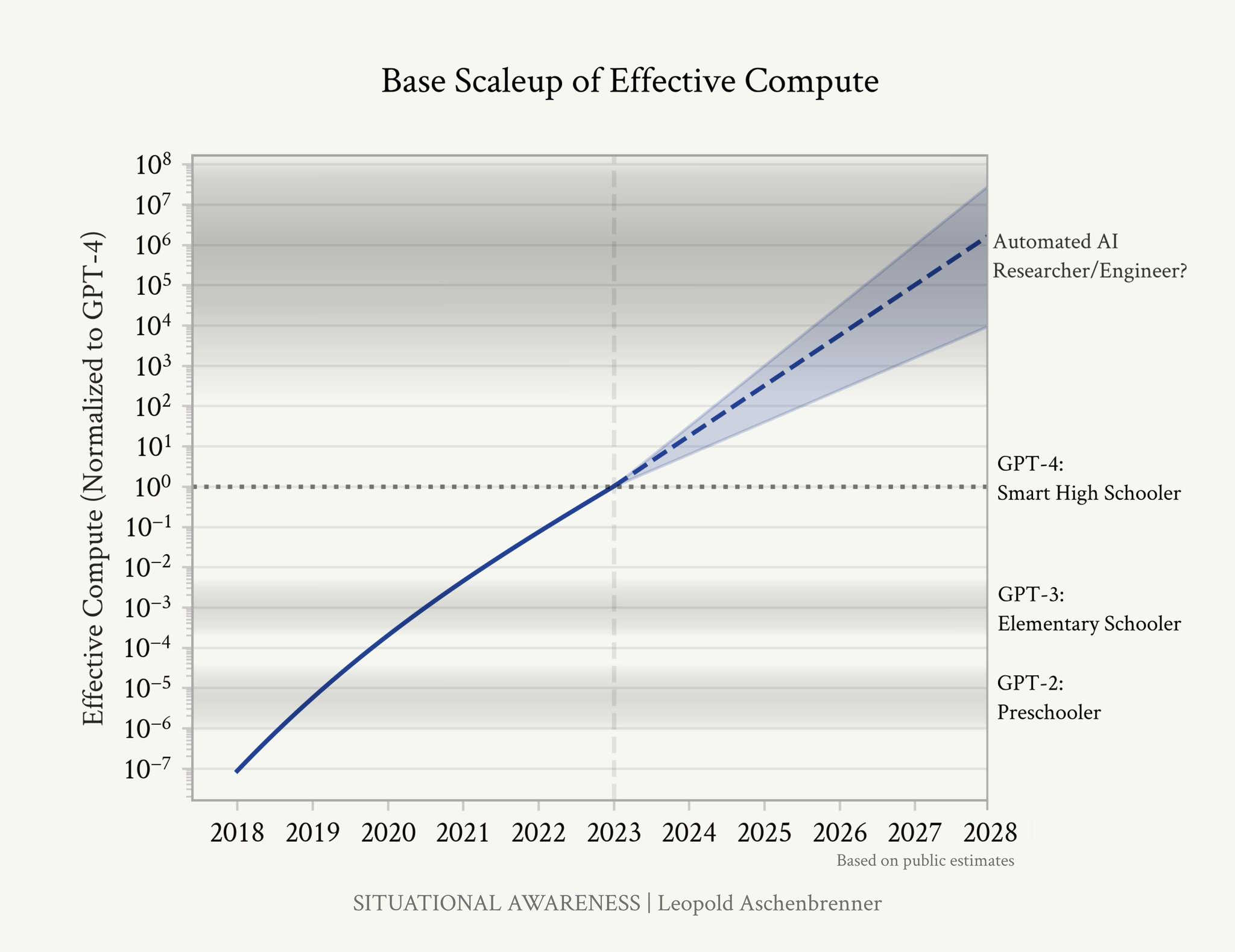

Order of Magnitude (OOM) improvements every year.

GPT-2 to GPT-4 took a preschooler-level LLM to smart-high-schooler level in 4 years. Tracing trendlines in compute (~0.5 orders of magnitude or OOMs/year), algorithmic efficiencies (~0.5 OOMs/year), and “unhobbling” gains (from chatbot to chain-of-thought to agent), we should expect another preschooler-to-high-schooler-sized qualitative jump by 2027.

See Situational Awareness by Leopold Aschenbrenner for more.

- During a goldrush, sell shovels.

Back during the 1849 California Gold Rush, few prospectors struck it rich. Instead, most of the people who made money back then were those who “sold shovels” (and jeans, tents, pickaxes and other supplies and services) to the prospectors who lived hard lives panning for gold.

Parallel to the explosion of LLMs, there is an explosion of tooling around LLMs. This includes tooling to train and serve LLMs in production, as well as vector databases. This landscape is dominated by SaaS providers and open source software, usually written in Python. Examples include: Langchain, Ollama, LlamaIndex, EmbedChain, Pinecone, Chroma, Huggingface. There are literally hundreds of startups offering hundreds of solutions, and hundreds of open source projects, all trying to gain market share.

- Large-scale investments by VC & Bigtech.

According to the World Economic Forum, a fervent gold rush in private AI investment has catalyzed transformative developments across key sectors such as autonomous vehicles, healthcare, and IT infrastructure. VC investments in AI totaled \$290 billion over the last 5 years. In the US, optimistic projections suggest that AI could boost annual GDP growth by 0.5 to 1.5% over the next decade. That's \$1.2 trillion to \$3.8 trillion in real terms.

- Increasing adoption.

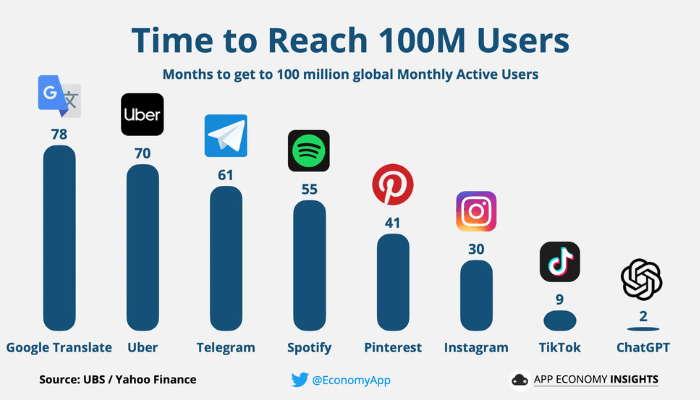

Reuters reports that ChatGPT is estimated to have reached 100 million monthly active users (MAUs) in January 2023, just two months after launch, making it the fastest-growing consumer application in history.

Klarna is one of the companies that has seen great success with AI. Its CEO, Sebastian Siemiatkowski, describes AI as a "game changer for productivity," highlighting its transformative impact on the company's operations. 90% of Klarna's staff use AI daily, indicating a company-wide embrace of AI technologies across all departments. AI tools have boosted employee productivity by 30-40%, allowing staff to focus more on meaningful and strategic work. Klarna is now also launching new AI-driven features for customers, enhancing user experience and maintaining a competitive edge.

- Uncertain trajectory.

Despite all the investment in the space and the OOM improvements happening every year, it's unclear how far the current exponential trajectory can and will continue. Wall Street is eagerly waiting to see how OpenAI's latest models perform, and price "AI stock" accordingly. Nvidia is selling GPUs in batches of 100k to Meta, Twitter/X and other companies, which has caused its sales to triple and its market capitalization to cross $3T, surpassing Amazon, Google and Meta. But it's unclear how long this can continue, with energy requirements surging, and cloud providers now building datacenters next to power-plants.
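The OOM trendlines quoted in the list above can be turned into a quick back-of-the-envelope estimate. The sketch below only adds up the two quantified rates (compute and algorithmic efficiency); the "unhobbling" gains are not quantified in the text, so they are left out. The 4-year horizon (2023 to 2027) and the function names are assumptions made for this illustration.

```python
# Back-of-the-envelope version of the OOM trendline argument.
# The two rates are the approximate figures quoted in the text; the 4-year
# horizon (2023 -> 2027) is an assumption made for this illustration.

COMPUTE_OOMS_PER_YEAR = 0.5   # growth in physical training compute
ALGO_OOMS_PER_YEAR = 0.5      # algorithmic efficiency gains


def effective_compute_ooms(years: float) -> float:
    """Total orders of magnitude of 'effective compute' gained over `years`."""
    return (COMPUTE_OOMS_PER_YEAR + ALGO_OOMS_PER_YEAR) * years


def as_multiplier(ooms: float) -> float:
    """Convert orders of magnitude into a plain multiplier (1 OOM = 10x)."""
    return 10.0 ** ooms


if __name__ == "__main__":
    ooms = effective_compute_ooms(4)
    # Prints: 4.0 OOMs, i.e. roughly 10,000x effective compute
    print(f"{ooms:.1f} OOMs, i.e. roughly {as_multiplier(ooms):,.0f}x effective compute")
```

In other words, if both trendlines hold, four years compound to about four orders of magnitude, which is the scale of jump the quoted passage is pointing at.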

Trajectory

Reasons to expect the AI boom to be sustained through 2030:

- OOM improvements in AI model performance have arrived consistently for the last 5 years. This trend is not showing signs of a slowdown.

- Wall Street investors, VCs and Bigtech (Amazon, Microsoft, Google, Meta, Twitter/X) believe the AI boom will continue.

- Billions of dollars of investment are flowing into the space.

- Continuous improvements in hardware, like specialized AI chips, are boosting computational capabilities while reducing energy costs.

- Many (but not all) smart people believe that model performance will not only keep improving, but that Artificial General Intelligence (AGI) can be reached on the current trajectory.

Reasons to expect a plateau earlier:

- David Cahn of leading VC firm Sequoia famously posed AI’s $600B Question in June 2024: “Where is all the revenue?” His point is that the companies generating Nvidia's outsized revenues (e.g. Meta, Twitter/X, etc.) will have to make that money back in their own revenues, but so far there is no sign of this additional revenue appearing in these downstream companies' statements.

- Energy constraints. The escalating energy consumption required to train and operate advanced AI models may become unsustainable, potentially limiting further advancements.

- Training data is limited. Current models have already been trained on much of the public Internet, and selectively on parts of the private Internet. The scarcity of high-quality, diverse training data may hinder further advancements in model accuracy.

- Although the latest OpenAI models outperform previous generations, they no longer do so just on a brute-force parameter count basis. For example, the latest o1-preview version is using chain-of-thought looping to increase response quality. It's not clear how many such architectural improvements remain to be discovered.

- Ethical and regulatory challenges could slow down AI development and limit its applications.

Key challenges to AI adoption

What are the key challenges faced by industry players in general in adopting AI projects, focusing on the trajectory of 2023-2024 Generative AI technologies?

- Misalignment with customer needs.

AI projects often fail when they are not grounded in solving genuine problems faced by customers and users. Focusing on AI for its own sake, rather than addressing specific pain points, leads to solutions that lack relevance and fail to gain user adoption.

- High AI API costs.

The expense associated with using AI APIs from third-party providers, such as proprietary language models, can be prohibitively high at scale. These costs make it difficult for organizations to implement AI solutions economically, especially when the return on investment is not immediate or guaranteed.

- Lack of experimentation culture.

Some companies have rigid organizational structures and bureaucratic processes that hinder rapid experimentation and innovation. This lack of agility makes it challenging to implement AI projects that don't offer a clear and immediate return on investment (ROI), for example, due to API costs. Since AI initiatives often involve uncertainty and require iterative development, the inability to quickly test and deploy these solutions prevents organizations from realizing potential long-term benefits.

- Lack of in-house expertise.

Many companies struggle with insufficient internal knowledge in areas like machine learning, prompt engineering, and the utilization of open source and open weight models like llama. This expertise gap leads to over-reliance on external consultants or vendors, resulting in higher costs and less control over AI initiatives.

- Ethical and Regulatory Concerns.

Concerns over privacy, security, and ethical issues like data-sharing and algorithmic bias can impede AI adoption. Without clear strategies to address these challenges, organizations may hesitate to deploy AI solutions.
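To make the API cost challenge from the list above concrete, here is a minimal sketch of how per-token pricing scales with traffic. Everything in it is hypothetical: the model names, the prices and the traffic figures are placeholders chosen for easy arithmetic, not quotes from any vendor's price list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPricing:
    """Per-token pricing for one model. All values used below are placeholders."""
    name: str
    usd_per_million_input_tokens: float
    usd_per_million_output_tokens: float


def monthly_cost_usd(
    pricing: ModelPricing,
    requests_per_day: int,
    input_tokens_per_request: int,
    output_tokens_per_request: int,
    days: int = 30,
) -> float:
    """Estimated monthly spend for a steady stream of similar requests."""
    cost_per_request = (
        input_tokens_per_request * pricing.usd_per_million_input_tokens
        + output_tokens_per_request * pricing.usd_per_million_output_tokens
    ) / 1_000_000
    return cost_per_request * requests_per_day * days


if __name__ == "__main__":
    # Hypothetical price points, NOT real vendor prices.
    premium = ModelPricing("premium-model", 5.00, 15.00)
    budget = ModelPricing("budget-model", 0.25, 0.75)

    # Hypothetical traffic: 50,000 requests/day, 1,500 tokens in, 300 tokens out.
    for pricing in (premium, budget):
        cost = monthly_cost_usd(pricing, 50_000, 1_500, 300)
        print(f"{pricing.name}: ${cost:,.0f} per month")
```

With these placeholder numbers the premium model comes out 20 times more expensive per month than the budget one, which is why the ROI question tends to surface as soon as a feature moves from prototype to production traffic.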

Strategy

Based on the above considerations, what is a good AI strategy for 2025 and beyond for a person or company not working on foundational models themselves — the vast majority I refer to as the "Rest of Us" in the title?

- Rely on existing foundational models.

Given the high cost and complexity of developing your own generative AI models, you should utilize existing foundational models provided by established organizations. This approach allows you to leverage cutting-edge technology without the significant investment required to build models from scratch. It is a practical decision to adopt proven models that can meet your needs effectively.

- Avoid irreversible decisions.

The AI field is rapidly evolving, with new developments emerging every 6-9 months. Therefore, you should avoid making long-term commitments to specific partners or vendors. By treating these decisions as reversible ("two-way doors"), you maintain the flexibility to adapt to changes in the industry. This approach ensures you can pivot quickly if better options become available.

- Identify use-cases for best-in-class, expensive models.

You should pinpoint scenarios where utilizing top-tier, expensive AI models from OpenAI provides significant value. Such use-cases include serving VIP customers, engaging with high-net-worth property investors, and supporting internal employees. In these contexts, the superior capabilities of premium models can enhance customer experience and satisfaction.

- Use cheaper or open models elsewhere.

For functions like call center operations and online chatbots, you can employ significantly less expensive models or open-source alternatives, such as the llama series of models from Meta. These models are adequate for handling general inquiries and routine tasks. By aligning the complexity of the AI model with the requirements of the use-case, you optimize costs without compromising service quality.

- Identify areas for AI product experimentation.

You should explore opportunities for rapid experimentation with AI features. These experiments will help you gather feedback and refine your AI offerings before a wider release.

- Build a small, expert AI team.

Assemble a dedicated AI team consisting of 3-5 experts who have a deep understanding of available models and the current tooling landscape. Their expertise will drive innovation and ensure successful AI integration.

- Engineer software for model flexibility.

Your AI-based software should be designed to allow for easy integration and swapping of different AI models. By creating a modular system, you can switch between commercial and open-source models with minimal adjustments. This flexibility enables you to take advantage of advancements in AI technology without overhauling your entire infrastructure.

- Centralize in-house documentation.

Collect all internal documentation into a centralized repository to create a comprehensive knowledge base. This will enable your in-house AI applications to easily access and query accumulated knowledge. Centralization enhances efficiency and ensures that valuable information is readily available to support decision-making and AI functionalities.

- Invest in training and upskilling employees.

Implement training programs to enhance the AI skills of your existing workforce. By upskilling employees in areas like machine learning and data analysis, you can tap into their potential to contribute to AI projects. This not only builds internal expertise but also fosters a culture of innovation throughout the organization.

- Develop guidelines for AI features.

Establish clear guidelines for implementing AI features. For instance, define your policy on supporting foreign languages to cater to regional customers. Specify the appropriate tone and style that chatbots should use to align with your brand image. Additionally, outline topics or content that are off-limits to ensure compliance and maintain customer trust.
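Two of the points above, engineering for model flexibility and matching premium versus cheaper models to use-cases, can be sketched together in a few lines. This is a minimal illustration rather than a recommended architecture: `TextModel`, `EchoModel`, `ModelRouter` and the use-case names are all hypothetical, and `EchoModel` merely stands in for a real backend that would call a vendor API or a locally hosted open weight model.

```python
from dataclasses import dataclass
from typing import Dict, Protocol


class TextModel(Protocol):
    """Anything that turns a prompt into a completion."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class EchoModel:
    """Stand-in backend for this sketch; it only tags the prompt with its name.

    A real backend would wrap a vendor SDK or a local model runtime.
    """
    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class ModelRouter:
    """Maps use-cases to backends so application code never names a vendor."""

    def __init__(self, default: TextModel) -> None:
        self._default = default
        self._routes: Dict[str, TextModel] = {}

    def register(self, use_case: str, model: TextModel) -> None:
        """Route one use-case to a specific backend."""
        self._routes[use_case] = model

    def complete(self, use_case: str, prompt: str) -> str:
        """Use the backend registered for `use_case`, else the default."""
        return self._routes.get(use_case, self._default).complete(prompt)


if __name__ == "__main__":
    # Cheap/open model by default, premium model only where it pays off.
    router = ModelRouter(default=EchoModel("open-weight-model"))
    router.register("vip_support", EchoModel("premium-model"))

    print(router.complete("vip_support", "Hello"))   # [premium-model] Hello
    print(router.complete("call_center", "Hello"))   # [open-weight-model] Hello
```

Because callers depend only on the use-case name, swapping the model behind "vip_support" next year is a one-line change in the routing setup, which keeps vendor choices a "two-way door".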

Conclusion

In the rapidly evolving AI landscape of 2024, organizations must adopt a strategic approach that leverages existing foundational models while staying adaptable to ongoing advancements. By selectively utilizing premium AI models for high-value applications and integrating cost-effective or open-source alternatives where appropriate, companies can optimize costs without sacrificing quality. Investing in internal expertise, fostering a culture of experimentation, and designing flexible, modular AI systems will position organizations to effectively navigate challenges and capitalize on future AI developments beyond 2025.