Can ChatGPT write the C++20 async message queue codes from the previous posts?

Marton Trencseni - Sat 15 April 2023 - C++

Introduction

In the previous articles I wrote a simple async message queue server in C++20:

- Writing a simple C++20 async message queue server - Part I

- Writing a simple C++20 async message queue server - Part II

Before that, I went through the same steps with Python, and concluded by trying to get ChatGPT-3.5 to write the code:

- Writing a simple Python async message queue server - Part I

- Writing a simple Python async message queue server - Part II

- Can ChatGPT write the Python async message queue codes from the previous posts?

In the Python case, ChatGPT-3.5 was able to produce working code, but it wasn't logically correct: for example, it mixed up delivery semantics during caching.

In this post, I will try the same with C++20. This time, I will use ChatGPT-4.

First version

Prompt:

Write a simple, asynchronous, event-driven, in-memory message queue TCP/IP server in C++20 using the asio library. The server should accept the listening port as a command line argument. Cache 100 messages per topic. Here is the message format for subscribing to a topic:

{'command':'subscribe', 'topic':'foo'}

{'command':'subscribe', 'topic':'foo', 'last_seen': '5'}

If last_seen is specified, only replay cached messages with higher id. Sent messages look like this:

{'command':'send', 'topic':'foo', 'msg':'hello', 'delivery':'all'}

{'command':'send', 'topic':'foo', 'msg':'world', 'delivery':'one'}

When the message is forwarded to subscribers, also insert an id field into the message, so subsciber clients can maintain their own last_seen.

ChatGPT's code:

    #include <asio.hpp>
    #include <iostream>
    #include <unordered_map>
    #include <vector>
    #include <deque>
    #include <string>
    #include <nlohmann/json.hpp>

    using asio::ip::tcp;
    using json = nlohmann::json;

    struct Subscriber {
        tcp::socket socket;
        std::string last_seen;

        Subscriber(asio::io_context& io_context)
            : socket(io_context) {}
    };

    class MessageQueueServer {
    public:
        MessageQueueServer(asio::io_context& io_context, int port)
            : acceptor_(io_context, tcp::endpoint(tcp::v4(), port)) {
            do_accept();
        }

    private:
        void do_accept() {
            acceptor_.async_accept(
                [this](std::error_code ec, tcp::socket socket) {
                    if (!ec) {
                        auto subscriber = std::make_shared<Subscriber>(std::move(socket));
                        read_command(subscriber);
                    }
                    do_accept();
                });
        }

        void read_command(std::shared_ptr<Subscriber> subscriber) {
            asio::async_read_until(subscriber->socket, asio::dynamic_buffer(buffer_),
                '\n',
                [this, subscriber](std::error_code ec, std::size_t) {
                    if (!ec) {
                        std::istream is(&buffer_);
                        json command;
                        is >> command;
                        buffer_.consume(buffer_.size());
                        handle_command(subscriber, command);
                        read_command(subscriber);
                    }
                });
        }

        void handle_command(std::shared_ptr<Subscriber> subscriber, const json& command) {
            std::string cmd = command["command"];
            std::string topic = command["topic"];
            if (cmd == "subscribe") {
                subscribers_[topic].push_back(subscriber);
                if (command.contains("last_seen")) {
                    subscriber->last_seen = command["last_seen"];
                }
                replay_messages(subscriber, topic);
            } else if (cmd == "send") {
                std::string msg = command["msg"];
                std::string delivery = command["delivery"];
                cache_message(topic, command);
                if (delivery == "all") {
                    for (const auto& sub : subscribers_[topic]) {
                        send_message(sub, command);
                    }
                } else if (delivery == "one") {
                    if (!subscribers_[topic].empty()) {
                        send_message(subscribers_[topic].front(), command);
                    }
                }
            }
        }

        void cache_message(const std::string& topic, const json& command) {
            auto& messages = message_cache_[topic];
            json cached_msg = command;
            cached_msg["id"] = next_message_id_++;
            messages.push_back(cached_msg);
            if (messages.size() > max_messages_per_topic_) {
                messages.pop_front();
            }
        }

        void replay_messages(std::shared_ptr<Subscriber> subscriber,
                             const std::string& topic) {
            for (const auto& msg : message_cache_[topic]) {
                if (subscriber->last_seen.empty() || msg["id"] > subscriber->last_seen) {
                    send_message(subscriber, msg);
                }
            }
        }

        void send_message(std::shared_ptr<Subscriber> subscriber, const json& message) {
            std::string msg = message.dump() + "\n";
            asio::async_write(subscriber->socket, asio::buffer(msg),
                [](std::error_code, std::size_t) {});
        }

    private:
        tcp::acceptor acceptor_;
        std::unordered_map<std::string, std::vector<std::shared_ptr<Subscriber>>> subscribers_;
        std::unordered_map<std::string, std::deque<json>> message_cache_;
        std::size_t next_message_id_ = 1;
        const std::size_t max_messages_per_topic_ = 100;
        asio::streambuf buffer_;
    };

    int main(int argc, char* argv[]) {
        if (argc != 2) {
            std::cerr << "Usage: message_queue_server <port>\n";
            return 1;
        }
        int port = std::stoi(argv[1]);
        try {
            asio::io_context io_context;
            MessageQueueServer server(io_context, port);
            io_context.run();
        } catch (std::exception& e) {
            std::cerr << "Exception: " << e.what() << "\n";
        }
        return 0;
    }

Compiling ChatGPT's code

The code does not compile, so I asked ChatGPT for help:

I'm trying to compile. In read_command(), I get this compile-time error:

no instance of overloaded function "asio::dynamic_buffer" matches the argument list

ChatGPT gives me a new version:

I apologize for the confusion. To resolve this error, you should change the asio::dynamic_buffer function call to asio::dynamic_buffer(buffer_.data()). Here's the updated read_command() function:

    void read_command(std::shared_ptr<Subscriber> subscriber) {
        asio::async_read_until(subscriber->socket, asio::dynamic_buffer(buffer_.data()),
            '\n',
            [this, subscriber](std::error_code ec, std::size_t) {
                if (!ec) {
                    std::istream is(&buffer_);
                    json command;
                    is >> command;
                    buffer_.consume(buffer_.size());
                    handle_command(subscriber, command);
                    read_command(subscriber);
                }
            });
    }

This still does not compile, so I ask again, and ChatGPT responds:

I apologize for the confusion earlier. I made a mistake in the suggestion. We should use asio::streambuf instead of asio::dynamic_buffer. Here's the updated read_command() function:

    void read_command(std::shared_ptr<Subscriber> subscriber) {
        asio::async_read_until(subscriber->socket, buffer_, '\n',
            [this, subscriber](std::error_code ec, std::size_t) {
                if (!ec) {
                    std::istream is(&buffer_);
                    json command;
                    is >> command;
                    buffer_.consume(buffer_.size());
                    handle_command(subscriber, command);
                    read_command(subscriber);
                }
            });
    }

Now I get this:

'Subscriber::Subscriber': no overloaded function could convert all the argument types

ChatGPT again gives me new code:

    struct Subscriber {
        tcp::socket socket;
        std::string last_seen;

        explicit Subscriber(tcp::socket&& socket)
            : socket(std::move(socket)) {}
    };

    // ...

    class MessageQueueServer {
    public:
        MessageQueueServer(asio::io_context& io_context, int port)
            : acceptor_(io_context, tcp::endpoint(tcp::v4(), port)) {
            do_accept();
        }

        // ...

Putting everything together, the code now compiles! I put json.hpp from the nlohmann JSON C++ library next to the .cpp file and change that one #include line to:

#include "json.hpp"

The fact that ChatGPT was able to guide me through these compile-time errors to a program that compiles is quite impressive. Looking at the code, there are some obvious problems, but let's try to run it anyway. It used JSON, which means double quotes " for the messages rather than the single quotes ' I specified in the prompt, but let's ignore that.
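As an aside, the single-quoted messages from the prompt are not valid JSON, so a strict JSON library will reject them as written. A minimal sketch of a hand-rolled alternative follows, assuming flat dictionaries whose keys and values are single-quoted strings with no escaped quotes. The names Dict and parse_flat_dict are hypothetical, and this is not the parser from the earlier posts:

```cpp
#include <cstddef>
#include <map>
#include <optional>
#include <string>
#include <string_view>

using Dict = std::map<std::string, std::string>;

// Hypothetical helper: parses {'k':'v', 'k2':'v2'} into a map.
// Assumes a flat dictionary, single-quoted keys and values, no escaped quotes.
// Returns std::nullopt on any input that does not match that shape.
std::optional<Dict> parse_flat_dict(std::string_view text) {
    constexpr auto npos = std::string_view::npos;
    std::size_t pos = text.find('{');
    if (pos == npos) return std::nullopt;
    ++pos;

    // Skips whitespace, then consumes `expected` if it is the next character.
    auto expect = [&](char expected) {
        pos = text.find_first_not_of(" \t\r\n", pos);
        if (pos == npos || text[pos] != expected) return false;
        ++pos;
        return true;
    };

    // Reads one 'quoted' token into `out`.
    auto read_quoted = [&](std::string& out) {
        if (!expect('\'')) return false;
        std::size_t close = text.find('\'', pos);
        if (close == npos) return false;
        out.assign(text.substr(pos, close - pos));
        pos = close + 1;
        return true;
    };

    Dict result;
    if (expect('}')) return result;  // empty dictionary
    while (true) {
        std::string key, value;
        if (!read_quoted(key) || !expect(':') || !read_quoted(value)) {
            return std::nullopt;
        }
        result[key] = value;
        if (expect('}')) return result;          // end of dictionary
        if (!expect(',')) return std::nullopt;   // otherwise a comma must follow
    }
}
```

With this, parse_flat_dict("{'command':'subscribe', 'topic':'foo'}") yields a two-entry map, while the double-quoted form of the same message returns std::nullopt.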

Running ChatGPT's code

After compiling the code, I ran it, and connected 2 clients. The first subscribes:

{"command":"subscribe", "topic":"foo"}

A second client sends a message:

{"command":"send", "topic":"foo", "msg":"0", "delivery":"one"}

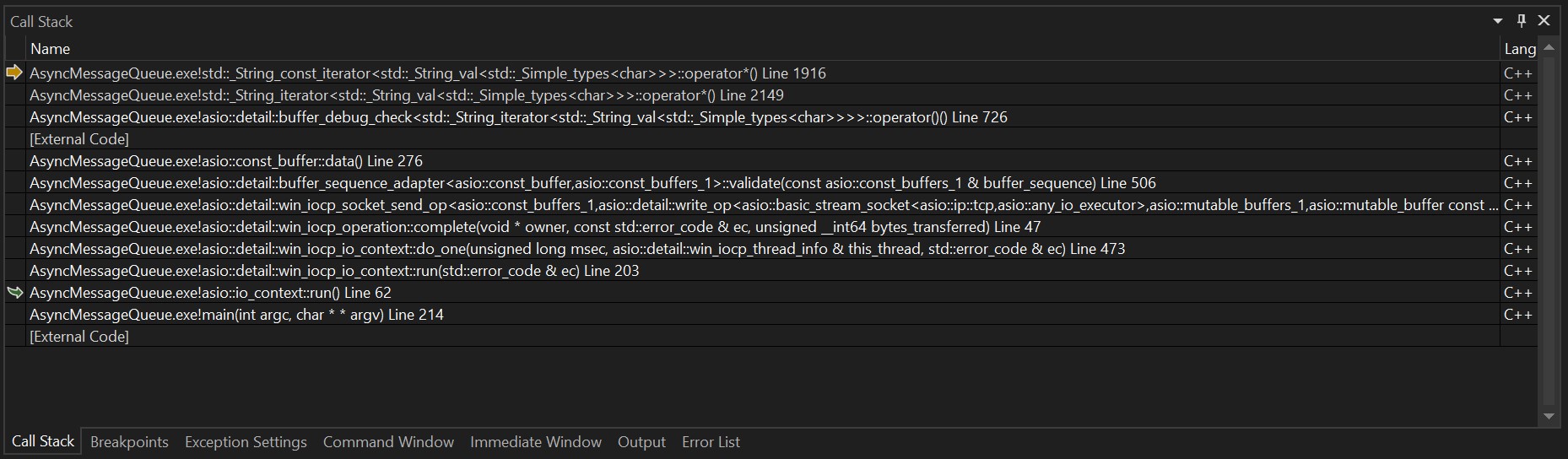

The message is delivered, and the server crashes immediately. There is none of our own code on the stack trace, the program is doing an async send() operation:

I tell ChatGPT what happened, it responds:

The issue might be related to the lifetime of the buffer_. Since buffer_ is a member variable of the MessageQueueServer class, it's shared between all connected clients. The ideal approach would be to have a separate buffer for each client. We can achieve this by adding a buffer to the Subscriber struct. Here's the updated Subscriber struct and the corresponding changes in the MessageQueueServer class:

    struct Subscriber {
        tcp::socket socket;
        asio::streambuf buffer;
        std::string last_seen;

        explicit Subscriber(tcp::socket&& socket)
            : socket(std::move(socket)) {}
    };

    // ...

    class MessageQueueServer {
    public:
        MessageQueueServer(asio::io_context& io_context, int port)
            : acceptor_(io_context, tcp::endpoint(tcp::v4(), port)) {
            do_accept();
        }

        // ...

        void read_command(std::shared_ptr<Subscriber> subscriber) {
            asio::async_read_until(subscriber->socket, subscriber->buffer, '\n',
                [this, subscriber](std::error_code ec, std::size_t) {
                    if (!ec) {
                        std::istream is(&subscriber->buffer);
                        json command;
                        is >> command;
                        subscriber->buffer.consume(subscriber->buffer.size());
                        handle_command(subscriber, command);
                        read_command(subscriber);
                    }
                });
        }

The good news is, the program still compiles with these changes. Unfortunately, it still crashes. The final ChatGPT .cpp file is here.
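One plausible contributor to the crash, which the exchange above does not confirm, is in send_message(): asio::buffer(msg) only refers to the local std::string, which is destroyed when the function returns, while asio requires the underlying memory to stay valid until the completion handler is called. A common remedy is to put the payload on the heap and let the handler own it. The sketch below shows that ownership pattern with a hypothetical FakeAsyncWriter standing in for the socket, so it runs without asio; the class, its methods, and send_message_owned are illustrative names only:

```cpp
#include <functional>
#include <memory>
#include <queue>
#include <string>
#include <string_view>
#include <utility>

// Hypothetical stand-in for an async I/O layer. Like asio::buffer(), it keeps
// only a view of the caller's bytes and reads them later, inside run().
class FakeAsyncWriter {
    struct Op {
        std::string_view data;
        std::function<void()> on_done;
    };

public:
    void async_write(std::string_view data, std::function<void()> on_done) {
        pending_.push(Op{data, std::move(on_done)});
    }

    // Performs all queued writes; the bytes are read here, long after the
    // function that queued them has returned.
    void run() {
        while (!pending_.empty()) {
            Op op = std::move(pending_.front());
            pending_.pop();
            written_.append(op.data);
            op.on_done();
        }
    }

    const std::string& written() const { return written_; }

private:
    std::queue<Op> pending_;
    std::string written_;
};

// The payload lives on the heap and the completion handler holds a
// shared_ptr to it, so the bytes outlive this function's stack frame.
void send_message_owned(FakeAsyncWriter& writer, const std::string& text) {
    auto payload = std::make_shared<std::string>(text + "\n");
    writer.async_write(*payload, [payload]() {
        // Nothing to do: the handler exists to keep `payload` alive.
    });
}
```

In the real server, the same idea would mean capturing a std::shared_ptr<std::string> in the lambda passed to asio::async_write and building the buffer from the pointed-to string.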

Logical bugs

Putting aside the buffer management bug that is causing the server to crash, there are other problems as well:

- in the example, I used single quotes ', not double-quotes ", which is not valid JSON; in my own implementation in the previous posts, I avoided including a large library by implementing (with the help of ChatGPT) a ~10 line parser function for flat string dictionaries

- caching and delivery is buggy: in handle_command(), it calls cache_message() irrespective of delivery semantics. So if a message has delivery=one, it can get delivered to a single recipient now, but also later from the cache

- in replay_messages() it does not implement delivery semantics at all

- only cached messages receive an id field, messages sent as they are received do not contain this field; this also means clients that receive non-cached messages cannot maintain their last_seen counters

- the last_seen field is stored as an std::string, and is then compared to the id lexicographically (instead of as ints)

- there are probably numerous other bugs, including memory management issues that would cause further crashes...
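The caching, id, and last_seen problems above can be sketched in isolation from the networking code. This is a minimal model under stated assumptions, not the implementation from the earlier posts: Message, TopicCache, stamp, replay, and parse_last_seen are hypothetical names, and the model assumes a delivery=one message is handed to a live subscriber and never cached.

```cpp
#include <charconv>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <string>
#include <system_error>
#include <unordered_map>
#include <utility>
#include <vector>

struct Message {
    std::uint64_t id;
    std::string topic;
    std::string msg;
    std::string delivery;  // "all" or "one"
};

// Parses last_seen from its wire form (a decimal string such as "5").
// Returns 0, meaning "replay everything", if the string is not a number.
std::uint64_t parse_last_seen(const std::string& s) {
    std::uint64_t value = 0;
    const char* end = s.data() + s.size();
    auto [ptr, ec] = std::from_chars(s.data(), end, value);
    return (ec == std::errc() && ptr == end) ? value : 0;
}

class TopicCache {
public:
    explicit TopicCache(std::size_t capacity = 100) : capacity_(capacity) {}

    // Gives every message an id, cached or not, so live deliveries carry one too.
    // Assumption: only delivery=all messages are kept for later replay.
    Message stamp(std::string topic, std::string msg, std::string delivery) {
        Message m{next_id_++, std::move(topic), std::move(msg), std::move(delivery)};
        if (m.delivery == "all") {
            auto& queue = cache_[m.topic];
            queue.push_back(m);
            if (queue.size() > capacity_) queue.pop_front();
        }
        return m;
    }

    // Returns cached messages whose id is numerically greater than last_seen.
    std::vector<Message> replay(const std::string& topic,
                                std::uint64_t last_seen = 0) const {
        std::vector<Message> out;
        auto it = cache_.find(topic);
        if (it == cache_.end()) return out;
        for (const auto& m : it->second) {
            if (m.id > last_seen) out.push_back(m);
        }
        return out;
    }

private:
    std::size_t capacity_;
    std::uint64_t next_id_ = 1;
    std::unordered_map<std::string, std::deque<Message>> cache_;
};
```

Here stamp() returns the message with its id so the same object can be forwarded to live subscribers, and replay("foo", parse_last_seen("9")) returns cached messages with ids 10 and up, which a lexicographic comparison would miss because "10" sorts before "9" as a string.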

Conclusion

Overall, I think it's quite impressive that ChatGPT-4 can write this approximate solution. In C++ it's much harder to get a correct program (due to memory management, types, compiling), and this shows. But beyond the language issues, ChatGPT also produced numerous logical bugs, as described above. The code is like what a student of C++ would produce with lots of help from Stack Overflow and Google: along the right lines, but with lots of problems. Since this was just a ~100 line toy problem, and it couldn't handle it, C++ programmers do not (yet) need to fear for their jobs!