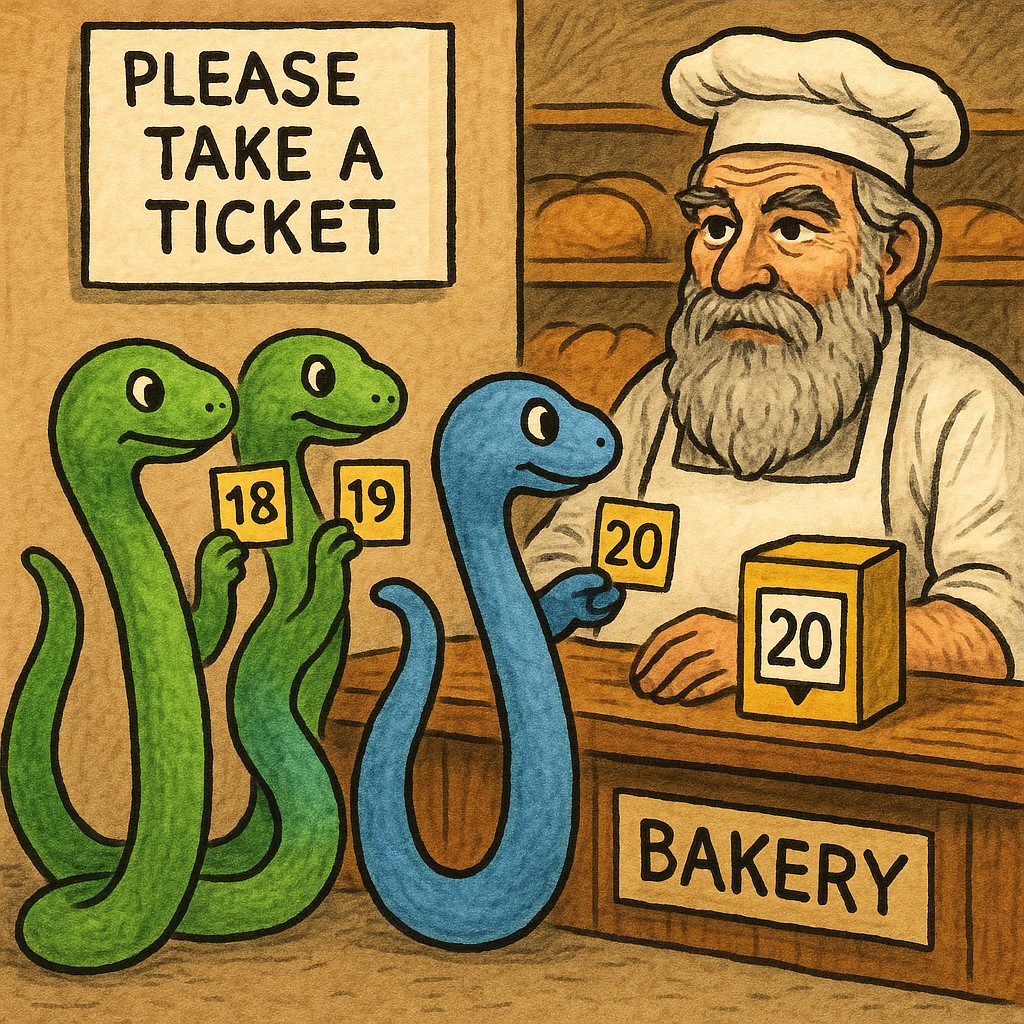

Exploring Lamport's Bakery algorithm in Python

Marton Trencseni - Fri 11 July 2025 - Programming

Introduction

In the previous article, I explored Lamport's Bakery algorithm and gave a C++20 implementation using std::atomic<>. In this follow-up, I will implement the Bakery algorithm in Python, using Flask HTTP servers. The code is on Github.

Bakery with Flask HTTP servers

As before, the critical section of each worker increments a shared counter. Correctness is observed by comparing the final count to the expected count of num_workers * num_loops. With the Flask HTTP servers the demonstration is split into 3 parts:

- Increment server: a HTTP server that just stores a single integer. For the purposes of this demonstration, there is no

/increment; instead each worker has to call/getto retrieve the current value and then/setto store the incremented value in their critical sections. Correctness assumes mutual exclusion, so that no two workers/getand/setthe same value in interleaving calls. - Workers: a HTTP server that implements the bakery logic. Each server exposes their read-only

/choosingand/ticketto the other workers, and calls the increment server in their critical section. - Driver: a simple Python program that first creates the increment server and N workers, kicks off the workers, and then compares the final count in the increment server to the expected count.

Increment server

The increment server is a trivial piece of Flask code:

from flask import Flask, jsonify, request, abort

app = Flask(__name__)

counter = 0

@app.route("/get", methods=["GET"])

def get():

return jsonify(value=counter)

@app.route("/set", methods=["POST"])

def set_value():

global counter

if not (data := request.get_json()) or "value" not in data:

abort(400)

counter = int(data["value"])

return jsonify(ok=True)

if __name__ == "__main__":

app.run(port=7000, threaded=False)

Worker

The only non-trivial portion of this demo is the worker. Similar to the C++ implementation, I factored the Bakery algorithm into a lock() and unlock() function, and lock() is further factored into announce_intent() and wait_acquire(). Below I show the more interesting parts of the code only:

import sys, time, threading, requests, logging

from flask import Flask, jsonify, request

num_loops = int(sys.argv[1]) # e.g. 1_000

my_id = int(sys.argv[2]) # 1 .. n

num_workers = int(sys.argv[3]) # e.g. 8

app = Flask(__name__)

choosing = 0

ticket = 0

done = False

@app.route("/choosing")

def endpoint_choosing():

return jsonify(choosing=choosing)

@app.route("/ticket")

def endpoint_ticket():

return jsonify(ticket=ticket)

...

def announce_intent():

global choosing, ticket

choosing = 1

# pick ticket = 1 + max(number)

ticket = 1 + max(worker_ticket(i) for i in range(1, num_workers+1))

choosing = 0

def wait_acquire():

for j in range(1, num_workers+1):

if j == my_id:

continue

while worker_choose(j):

time.sleep(0.001)

while True:

tj = worker_ticket(j)

if tj == 0 or (tj, j) > (ticket, my_id):

break

time.sleep(0.001)

def lock():

announce_intent()

wait_acquire()

def unlock():

global ticket

ticket = 0

def critical_section():

curr = requests.get(f"http://127.0.0.1:7000/get").json()["value"] # get

requests.post(f"http://127.0.0.1:7000/set", json={"value": curr + 1}) # set

def run_worker():

global done

for i in range(num_loops):

lock()

critical_section()

unlock()

#print(f"Worker {my_id} at {i}")

done = True

print(f"Worker {my_id} Done.", flush=True)

if __name__ == "__main__":

app.run(port=7000+my_id, threaded=False)

Driver

The driver is relatively straighforward:

- Start the increment server.

- Start N workers. Once the workers start looping, they call each other's

/choosingand/ticket, so we have to make sure all of them are started up before starting the loops. - Wait a second to make sure all workers are up.

- Trigger all N workers to start their loops by calling their

/startendpoint. - Repeatedly check all N workers'

/statusendpoint and wait until they all have finished looping. - Retrieve the final count from the increment server and compare to the expected count.

The code:

import subprocess, requests, time, sys, logging

from multiprocessing import Process

...

def wait_done(i):

url = f"http://127.0.0.1:{7000+i}/status"

while not requests.get(url).json().get("done"):

time.sleep(0.1)

def main():

# start increment server

procs = [spawn("inc_server.py")]

# start workers

for i in range(1, num_workers+1):

procs.append(spawn("worker.py", num_loops, i, num_workers))

# allow ports to open

time.sleep(1)

# start workers

for i in range(1, num_workers+1):

requests.post(f"http://127.0.0.1:{7000+i}/start")

# wait until all workers have done their part

for i in range(1, num_workers+1):

wait_done(i)

# check results

expected = num_workers * num_loops

observed = requests.get("http://127.0.0.1:7000/get").json()["value"]

print(f"Workers: {num_workers}")

print(f"Iterations: {num_loops}")

print(f"Expected: {expected}")

print(f"Observed: {observed}")

print(f"Passed!" if expected == observed else "FAILED!")

# clean up

for p in procs:

p.terminate()

for p in procs:

p.wait()

if __name__ == "__main__":

main()

Running the demonstration

First, let's comment out the lock() and unlock() in the workers to disable our mutual exclusion guarantee. Due to the high degree of interleaving with this HTTP implementation, even at low num_loops, we will observe how mutual exclusion breaks without the Bakery algorithm. Let's run driver.py with argument 8 100 for 8 workers and 100 loops each.

$ time python3 driver.py 8 100

...

Workers: 8

Iterations: 100

Expected: 800

Observed: 165

FAILED!

real 0m1.981s

user 0m5.159s

sys 0m0.581s

With mutual exclusion, notice it's 8x slower, because each increment happens serially due to mutual exclusion:

$ time python3 driver.py 8 100

...

Workers: 8

Iterations: 100

Expected: 800

Observed: 800

Passed!

real 0m16.431s

user 1m0.274s

sys 0m7.147s

Conclusion

Implementing the Bakery algorithm in Python was fun; to my surprise the Python implementation, even with the overhead of Flask, is a cleaner demonstration of the algorithm than the C++ version.