The Full Stack Data Scientist

Marton Trencseni - Fri 23 July 2021 - Data

Introduction

What is a full-stack data scientist? I would argue it's a data scientist who can sustainably achieve bottom-line impact, without blocking on external help from other roles [1]. Having said that, in many situations external help from other roles is available in the form of tools, platforms or actual help, and it's perfectly fine to rely on that. But, in other situations, such as an early startup environment, or when working for a company with no established technology culture, help is not always available.

The term Data Science covers a lot of ground. Being a Data Scientist is very different in a 10 person startup versus a 100,000 person company like Google or Facebook. What I write here is based on my personal experience at small to medium sized San Francisco style startups, big tech companies like Facebook, and non-tech companies in the delivery and retail space.

In terms of tooling, fortunately there is a bewildering number of open source and SaaS offerings for Data Science and Machine Learning. No single individual can learn all the tools; that is not what "full-stack" means. I believe the right approach is judgmental vertical cutting: using our experience, judgement and taste, we have to pick a vertical slice of tooling (eg. Scala or Python, AWS or Azure, etc), stop thinking about the other 100 options [2], and get good at the tools we picked.

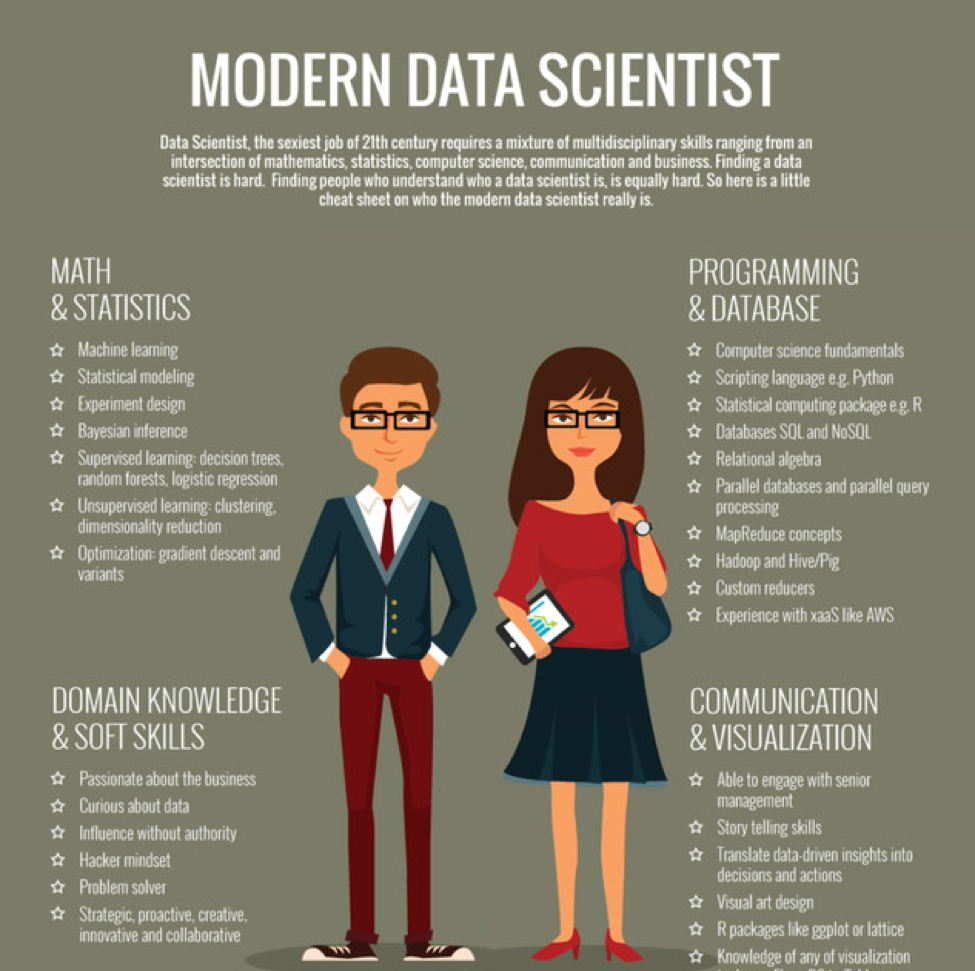

What are the core skills a data scientist needs to sustainably achieve bottom-line impact, without blocking on external help from other roles?

Core skills

Reading and learning. I put this first because it is a building block for the rest: the field is moving so fast that we have to keep reading and learning just to stay current. If we also want to keep up-to-date with the latest results in neural networks or reinforcement learning, we need to be able to scan academic articles to extract the relevant information [3].

Product sense (or business sense). We need to understand the product or business, both qualitatively and quantitatively (metrics), so we can formulate ideas, hypotheses and experiments on how to improve it, eg. get more engagement, more active users, sell more tickets. Without this, however good we are technically, we won't know what's worth working on.

SQL. Hopefully our data already lives in an SQL database (a "data warehouse"). If not, the first order of business is moving it there. In my experience, we need to spend a significant amount of our time deep-diving data in SQL, so we understand what datasets we have, their data quality, delays and landing times, what the core metric values are, etc. It's also good practice to feed results from our own work (A/B tests, tabular ML outputs) back into the database. Once they are there, it's easy to work on them (SQL is better than awk or pandas), share them with others, and put dashboards on top.
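As a minimal sketch of feeding results back into the database, the snippet below writes per-variant A/B test results into a table and then queries them with SQL. Everything here is an assumption for illustration: the in-memory SQLite database stands in for a real data warehouse, and the table name `ab_test_results`, the experiment name and the numbers are made up.

```python
import sqlite3

# Hypothetical per-variant results from an A/B test (made-up numbers).
ab_results = [
    ("signup_funnel_v2", "control", 10000, 1000),
    ("signup_funnel_v2", "treatment", 10000, 1100),
]

# In-memory SQLite is a stand-in for the real data warehouse.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE ab_test_results (
        experiment  TEXT,
        variant     TEXT,
        users       INTEGER,
        conversions INTEGER
    )
    """
)
conn.executemany("INSERT INTO ab_test_results VALUES (?, ?, ?, ?)", ab_results)
conn.commit()

# Once the results are in a table, anybody can query them or chart them.
rows = conn.execute(
    """
    SELECT variant, 1.0 * conversions / users AS conversion_rate
    FROM ab_test_results
    WHERE experiment = 'signup_funnel_v2'
    ORDER BY variant
    """
).fetchall()

for variant, rate in rows:
    print(f"{variant}: {rate:.3f}")
```

With a real warehouse only the connection line would change; the point is that the results end up in a table next to the rest of the data.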

Dashboarding. To understand data, we have to visualize it. It's worth doing this on dashboards, because that way the visualization automatically updates when the underlying data changes. This makes sense even if we're the only ones looking at the dashboard (eg. a model performance dashboard).

ETL. ETL is just techno speak for "data job automation". The point is, there are some jobs, involving data, which have to be triggered once an hour or day or week. Examples:

- Usually, when we're visualizing data, we're not working off the raw tables, but make smaller, derived summary tables. These have to be continually re-computed.

- Machine Learning models have to be re-trained and re-deployed, usually daily or weekly.

Setting up and running an ETL scheduler like Airflow is quick and easy, so it's worth doing. At tech companies, this is one of the jobs of data engineers.
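To make the first example concrete, here is a minimal sketch of a job that recomputes a derived summary table from a raw table. It is plain Python on an in-memory SQLite database, not Airflow code; the table names `events` and `daily_active_users` and the sample rows are hypothetical. In practice a scheduler would call a function like this once an hour or day.

```python
import sqlite3


def rebuild_daily_summary(conn):
    """Recompute the derived summary table from the raw events table.

    The table is dropped and rebuilt on every run, so the job can be
    re-run safely after a failure or a late data landing.
    """
    conn.execute("DROP TABLE IF EXISTS daily_active_users")
    conn.execute(
        """
        CREATE TABLE daily_active_users AS
        SELECT event_date, COUNT(DISTINCT user_id) AS active_users
        FROM events
        GROUP BY event_date
        """
    )
    conn.commit()


# Hypothetical raw table with a few made-up rows.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (event_date TEXT, user_id INTEGER)")
conn.executemany(
    "INSERT INTO events VALUES (?, ?)",
    [
        ("2021-07-22", 1),
        ("2021-07-22", 2),
        ("2021-07-22", 1),  # same user twice on the same day
        ("2021-07-23", 3),
    ],
)

rebuild_daily_summary(conn)
rebuild_daily_summary(conn)  # running it again gives the same table

summary = conn.execute(
    "SELECT event_date, active_users FROM daily_active_users ORDER BY event_date"
).fetchall()
print(summary)
```

Dashboards then read from the small `daily_active_users` table instead of scanning the raw events.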

Modeling. This is what people usually think about when they think Data Science: building random forests, timeseries forecasting models, training neural networks for image recognition, etc. But I suspect that most of us only spend 10-20% of our time doing modeling. At big companies, there are lots of different roles (infra engineers, data engineers, ML engineers, analysts, data scientists), so each role ends up more focused on its own turf, and can safely assume the other roles will do their part. But most companies are not big tech companies, so we have to be much more full-stack and be able to step outside of just modeling and statistics.

Experimentation and A/B testing. Experimentation is a core activity of Data Science, whether we're running an A/B test with a product team to determine which version of a signup funnel works best, or comparing a new version of a model to the current one in production. A/B testing is a beautiful topic with unexpected depth; check out the many articles on this blog on A/B testing.
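As an illustration of the signup funnel case, here is a minimal sketch of a two-sided two-proportion z-test using only the standard library. It is one common way to evaluate a conversion A/B test, not the only one, and the function name and the counts in the usage example are made up.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for the difference between two conversion rates.

    conv_a, conv_b: number of conversions in variants A and B
    n_a, n_b:       number of users in variants A and B
    Returns (z, p_value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis of no difference.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value of a standard normal: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Made-up counts: 10.0% vs 11.0% conversion, 10,000 users per variant.
z, p_value = two_proportion_z_test(1000, 10000, 1100, 10000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

The normal approximation assumes reasonably large samples; for small counts an exact test would be a better choice.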

Math, probability theory, statistics. Without this, we can't make sense of the inputs and outputs of our models or run A/B tests. It's painful when people make mistakes and don't notice that a correlation should be between -1 and 1, that a z-score of 100 is suspect, as is a MAPE of 0.1%, or when they confuse accuracy and AUC, or don't know what a p-value means.
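A minimal sketch of two of the quantities mentioned above, so the sanity checks have something to hang on: the Pearson correlation, which by construction lies between -1 and 1, and MAPE, the mean absolute percentage error. The function names and the data are made up for illustration.

```python
import math


def pearson_correlation(xs, ys):
    """Pearson correlation coefficient; always between -1 and 1."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def mape(actuals, forecasts):
    """Mean absolute percentage error, in percent. Actuals must be non-zero."""
    errors = [abs((a - f) / a) for a, f in zip(actuals, forecasts)]
    return 100 * sum(errors) / len(errors)


# Made-up data.
actuals = [100, 200, 300, 400]
forecasts = [110, 190, 330, 380]

r = pearson_correlation(actuals, forecasts)
error = mape(actuals, forecasts)

# If a check like this fails, the bug is in our code, not in the data.
assert -1 - 1e-9 <= r <= 1 + 1e-9
print(f"correlation = {r:.3f}, MAPE = {error:.1f}%")
```

A MAPE that comes out suspiciously close to zero on real data is usually a sign of leakage, such as evaluating on the training set.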

Programming and systems. In my experience, a full-stack Data Scientist needs to have a strong software engineering background. Eg. Python is necessary to do basic modeling work in notebooks, but also to productionalize models later. Some companies will have dedicated people for productionalizing, but smaller shops won't. We have to be able to install packages and use tools such as git and GitHub. Also, in our daily work we inevitably hit issues such as exceptions and out-of-memory errors. We have to be able to find and examine logs if our production model crashes.

Productionalizing, MLOps. To sustainably achieve impact, at a high velocity, we need to build an infrastructure that allows developing, deploying, training, hyperparameter tuning (possibly with AutoML), monitoring, experimenting and logging of models. MLOps is a relatively new field (outside of big tech) and there are lots of options, so it's especially important to be judicious and apply taste.

Communication. Whether working with a product team or supporting other teams with analytics, insights, experimentation and decision science, we need to be credible and communicate in a clear and concise way [4].

Conclusion

In my experience, in Data Science it's best to have a software engineering background combined with a quantitative background like mathematics, physics or economics [5]. That, combined with curiosity, and never saying "that's not my job", over an extended period of time, is the way to success in Data Science.

-

Footnotes:

[1] Google, Stack Overflow, etc are fine of course...

[2] I will give an example of what the right amount of judgmental is. If we're currently using X, and somebody comes along and shows us that Y is 10x faster or accomplishes the same thing in 10x less code, then we should switch to Y and thank the person for having taught us something. But in general, getting bogged down in "why are we using X and not Y" type discussions is a waste of time, because there are just too many Ys. If X works reasonably well, the burden of proof is on the other side to show a 10x improvement. I stole the 10x rule from the database engineering community, where it's common wisdom that if a challenger database technology wants to displace the current king, it needs to be 10x better in some relevant dimension. Otherwise, people just won't go through the trouble of replacing their core database systems.

[3] Academic articles are not read "cover to cover", especially not by practitioners. To get an article published, academics are forced to surround a core result or argument with 80% fluff. A good rule of thumb is to read the abstract and the introduction, skip the sections where they talk about previous work, and then look at the core result, usually in sections 3-5. Benchmarks can usually also be safely skipped, as can the conclusion, which is the same as the abstract and introduction.

[4] I don't believe in dumbing down analytics and data science and too much "storytelling". Partner roles in 2021 need to be analytics savvy.

[5] If I had to pick one, I would pick Computer Science. As part of a good computer science degree, we learn all the probability and statistics that's necessary.