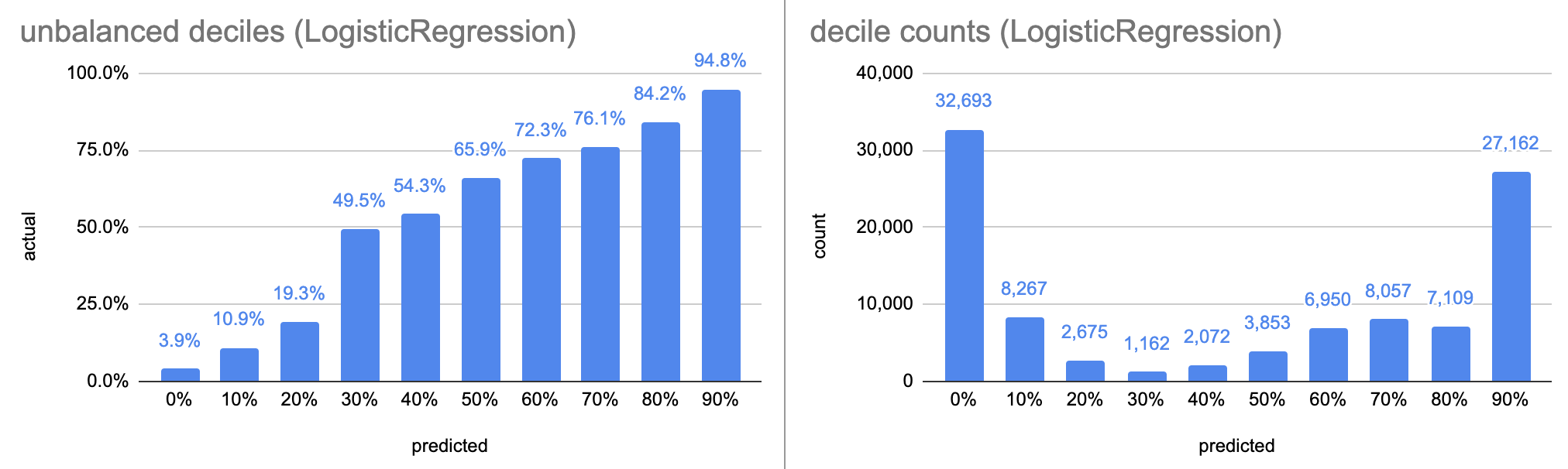

Calibration curves for delivery prediction with Scikit-Learn

Marton Trencseni - Thu 21 November 2019 • Tagged with machine, learning, fetchr, skl, calibration

I show calibration curves for four different binary classification Scikit-Learn models we built for delivery prediction at Fetchr, trained using real-world data: LogisticRegression, DecisionTree, RandomForest and GradientBoosting.
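The Fetchr delivery data and the exact model settings are not public, so the following is only a minimal sketch under stated assumptions. It uses a synthetic dataset from `make_classification` as a stand-in, and the hyperparameters (for example `max_depth=5` for the tree) were picked for illustration, not taken from the original models. It fits the four model types and calls Scikit-Learn's `calibration_curve`, which bins the predicted probabilities and returns, for each non-empty bin, the observed fraction of positives and the mean predicted probability. A well-calibrated model keeps these two close, so its curve hugs the diagonal.

```python
import numpy as np
from sklearn.calibration import calibration_curve
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Assumption: synthetic stand-in for the (non-public) delivery dataset.
X, y = make_classification(n_samples=5000, n_features=20, n_informative=8, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

# The four model families from the post; hyperparameters are illustrative only.
models = {
    "LogisticRegression": LogisticRegression(max_iter=1000),
    "DecisionTree": DecisionTreeClassifier(max_depth=5, random_state=0),
    "RandomForest": RandomForestClassifier(n_estimators=100, random_state=0),
    "GradientBoosting": GradientBoostingClassifier(random_state=0),
}

curves = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    # Predicted probability of the positive class on held-out data.
    proba = model.predict_proba(X_test)[:, 1]
    # Per bin: fraction of actual positives, and mean predicted probability.
    # Empty bins are dropped, so fewer than n_bins points may come back.
    frac_pos, mean_pred = calibration_curve(y_test, proba, n_bins=10)
    curves[name] = (mean_pred, frac_pos)
    # Simple unweighted summary: average distance from the diagonal across bins.
    gap = np.mean(np.abs(frac_pos - mean_pred))
    print(f"{name:20s} bins={len(mean_pred):2d} mean |gap|={gap:.3f}")
```

To draw the actual calibration curves, plot `mean_pred` on the x-axis against `frac_pos` on the y-axis for each model, together with the `y = x` diagonal as the reference for perfect calibration.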