Bayesian A/B conversion tests

Marton Trencseni - Tue 31 March 2020 • Tagged with bayesian, ab-testing

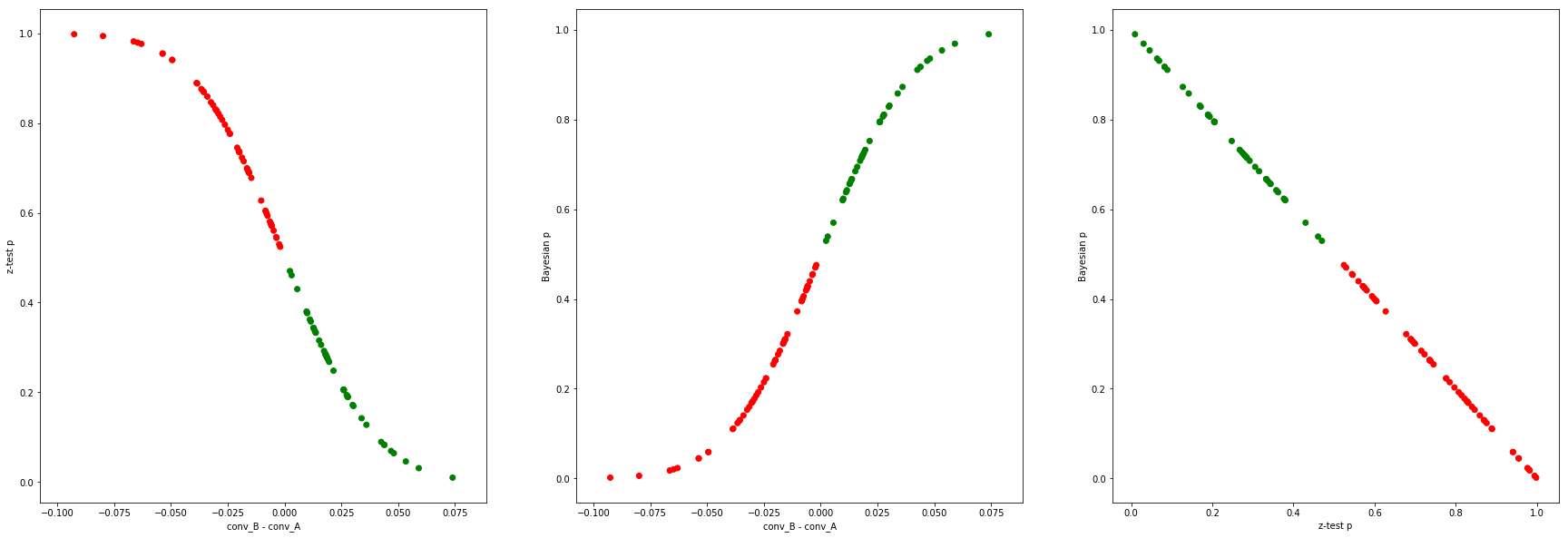

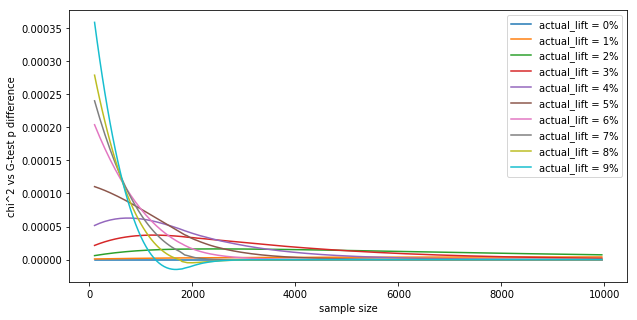

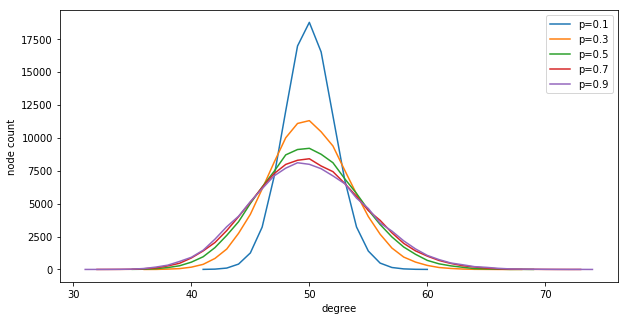

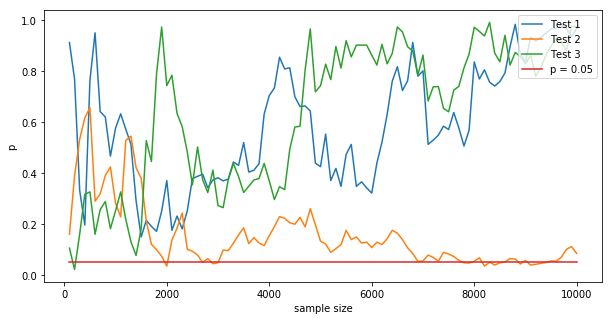

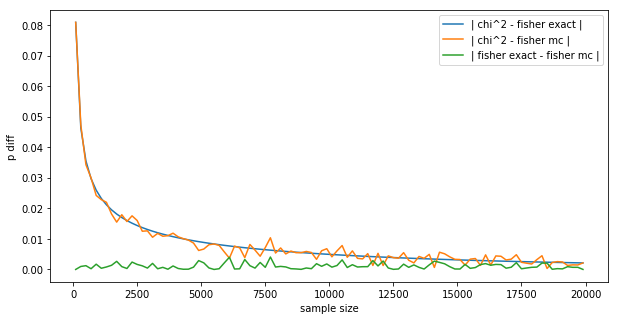

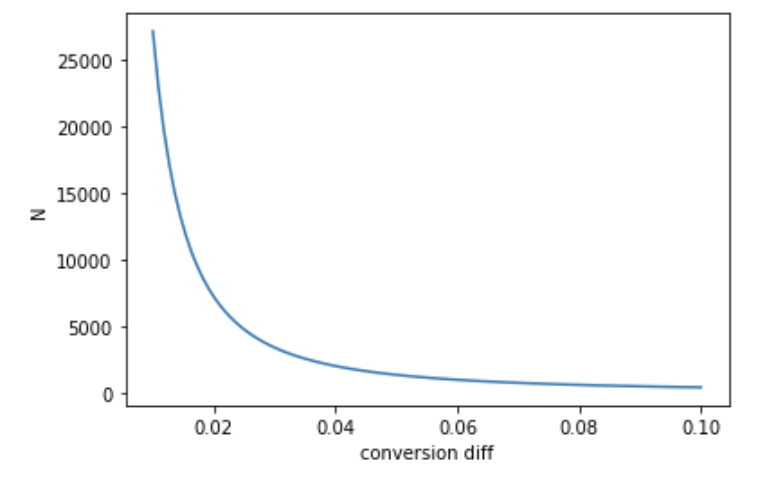

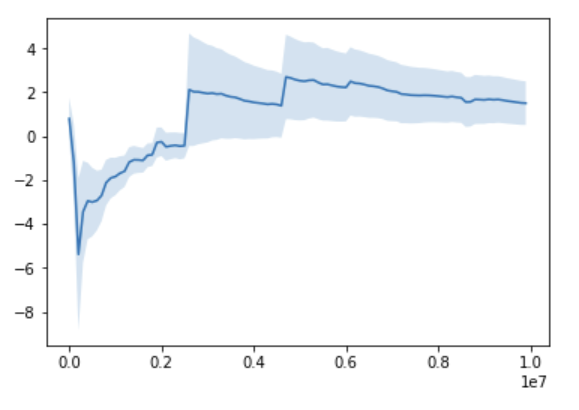

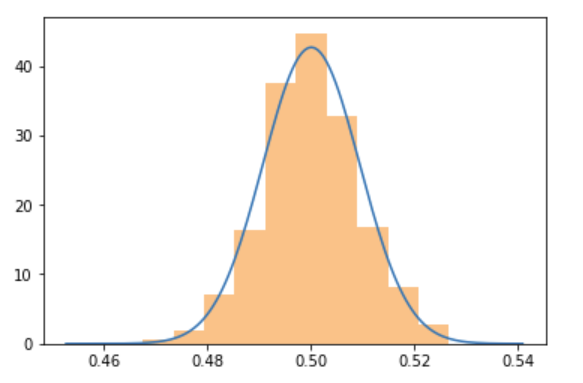

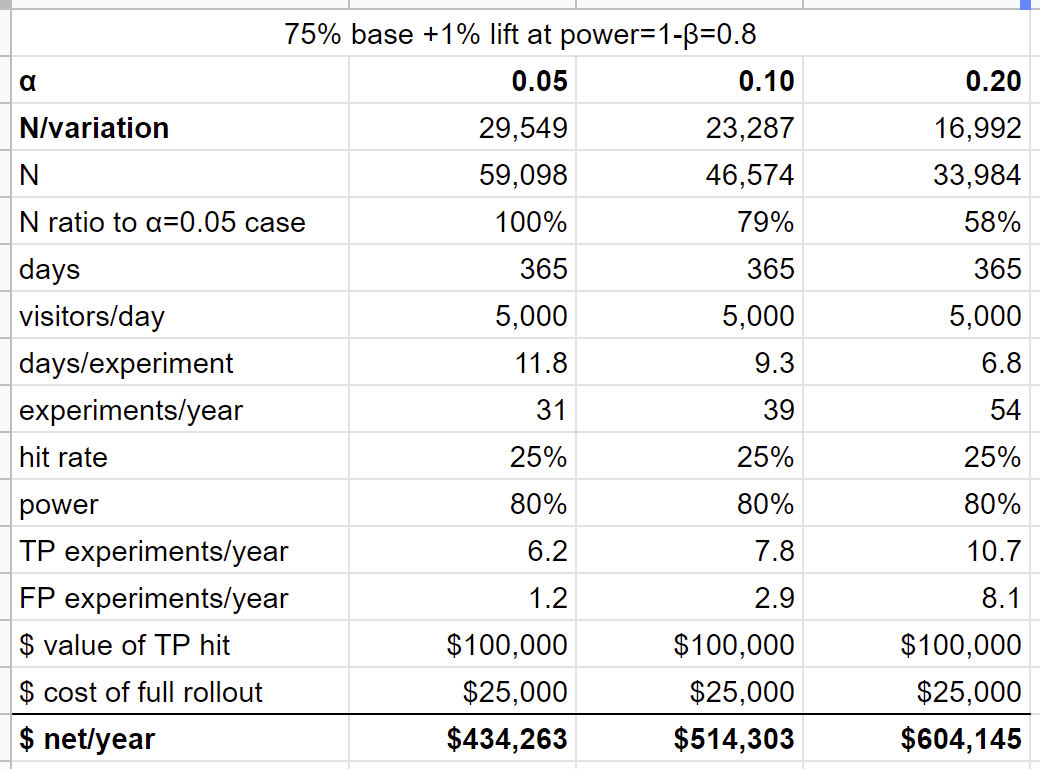

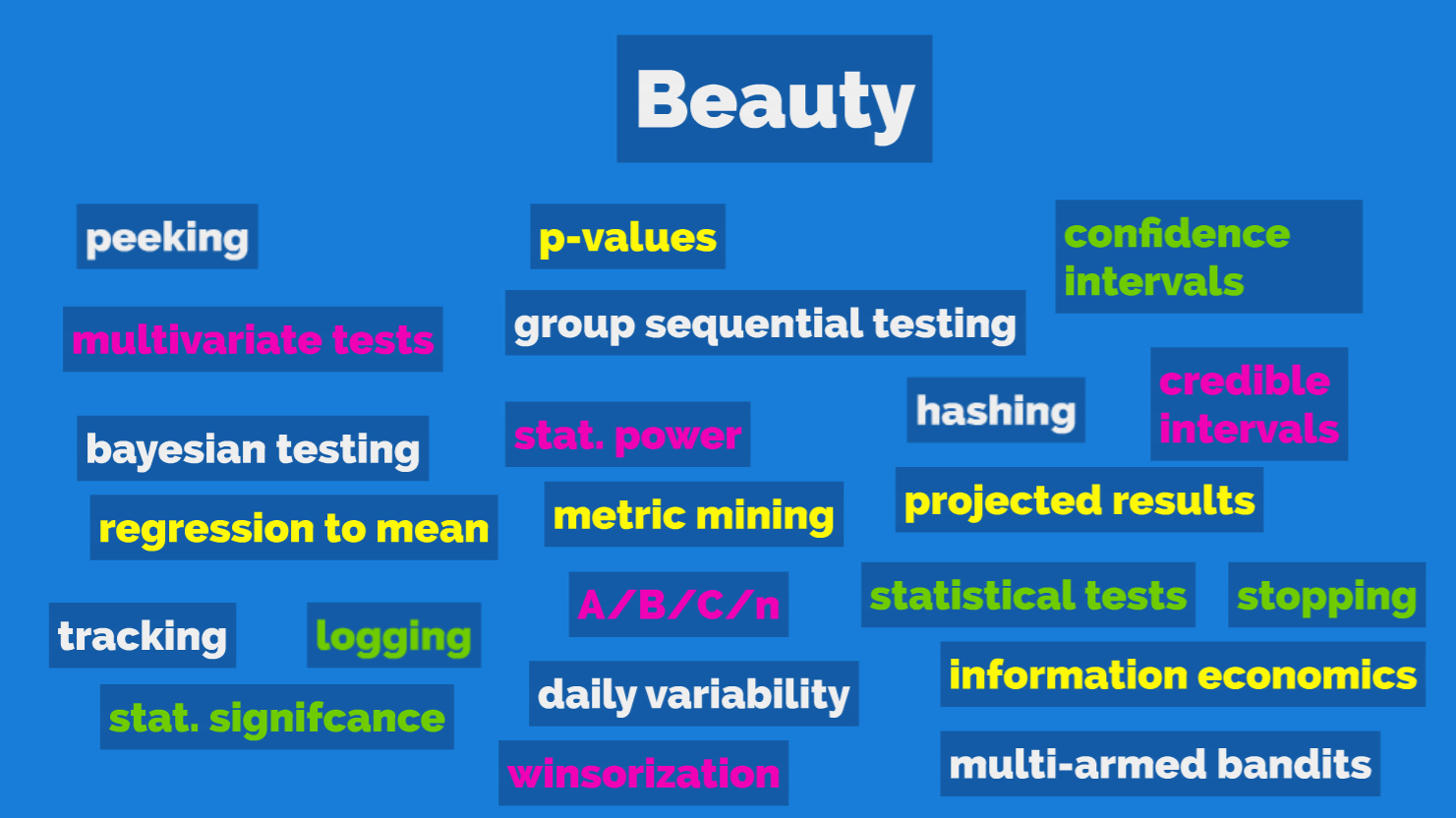

I compare the probabilities produced by Bayesian A/B testing with Beta distributions to the p-values of frequentist A/B tests, using Monte Carlo simulations. Under many circumstances, the Bayesian probability of the action hypothesis being true and the frequentist p-value are complementary, i.e. they add up to approximately 1.
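The comparison can be sketched in a few lines of Python. This is a minimal, hypothetical sketch, not the article's own code. It assumes uniform Beta(1, 1) priors and treats the action hypothesis as "B converts better than A". For the frequentist side it uses a one-sided pooled two-proportion z-test. The function names, true conversion rates and sample sizes are illustrative assumptions. Only the standard library is used, so the posterior probability is estimated by sampling with `random.betavariate`.

```python
import math
import random
from statistics import NormalDist


def bayesian_prob_b_beats_a(conv_a, n_a, conv_b, n_b, samples=20_000, seed=0):
    """Monte Carlo estimate of P(p_B > p_A | data).

    Assumption: independent uniform Beta(1, 1) priors, so each posterior is
    Beta(1 + conversions, 1 + non-conversions).
    """
    rng = random.Random(seed)
    wins = 0
    for _ in range(samples):
        p_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        p_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        if p_b > p_a:
            wins += 1
    return wins / samples


def one_sided_z_test_p_value(conv_a, n_a, conv_b, n_b):
    """Assumed frequentist test: one-sided pooled z-test for H1: p_B > p_A."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / se
    return 1 - NormalDist().cdf(z)


def simulate_experiments(rate_a, rate_b, n, experiments=5, seed=42):
    """Simulate A/B tests with hypothetical true rates; return (bayes, p) pairs."""
    rng = random.Random(seed)
    results = []
    for i in range(experiments):
        # Binomial draws built from Bernoulli trials (stdlib only).
        conv_a = sum(rng.random() < rate_a for _ in range(n))
        conv_b = sum(rng.random() < rate_b for _ in range(n))
        bayes = bayesian_prob_b_beats_a(conv_a, n, conv_b, n, seed=i)
        p = one_sided_z_test_p_value(conv_a, n, conv_b, n)
        results.append((bayes, p))
    return results


if __name__ == "__main__":
    for bayes, p in simulate_experiments(rate_a=0.10, rate_b=0.11, n=5_000):
        print(f"P(B > A) = {bayes:.3f}  p-value = {p:.3f}  sum = {bayes + p:.3f}")
```

Running the script prints one line per simulated experiment, and in each line P(B > A) and the p-value should add up to roughly 1. With small samples or conversion rates close to 0 or 1, the normal approximation behind the z-test is weaker, so the two numbers can drift further from being exact complements.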