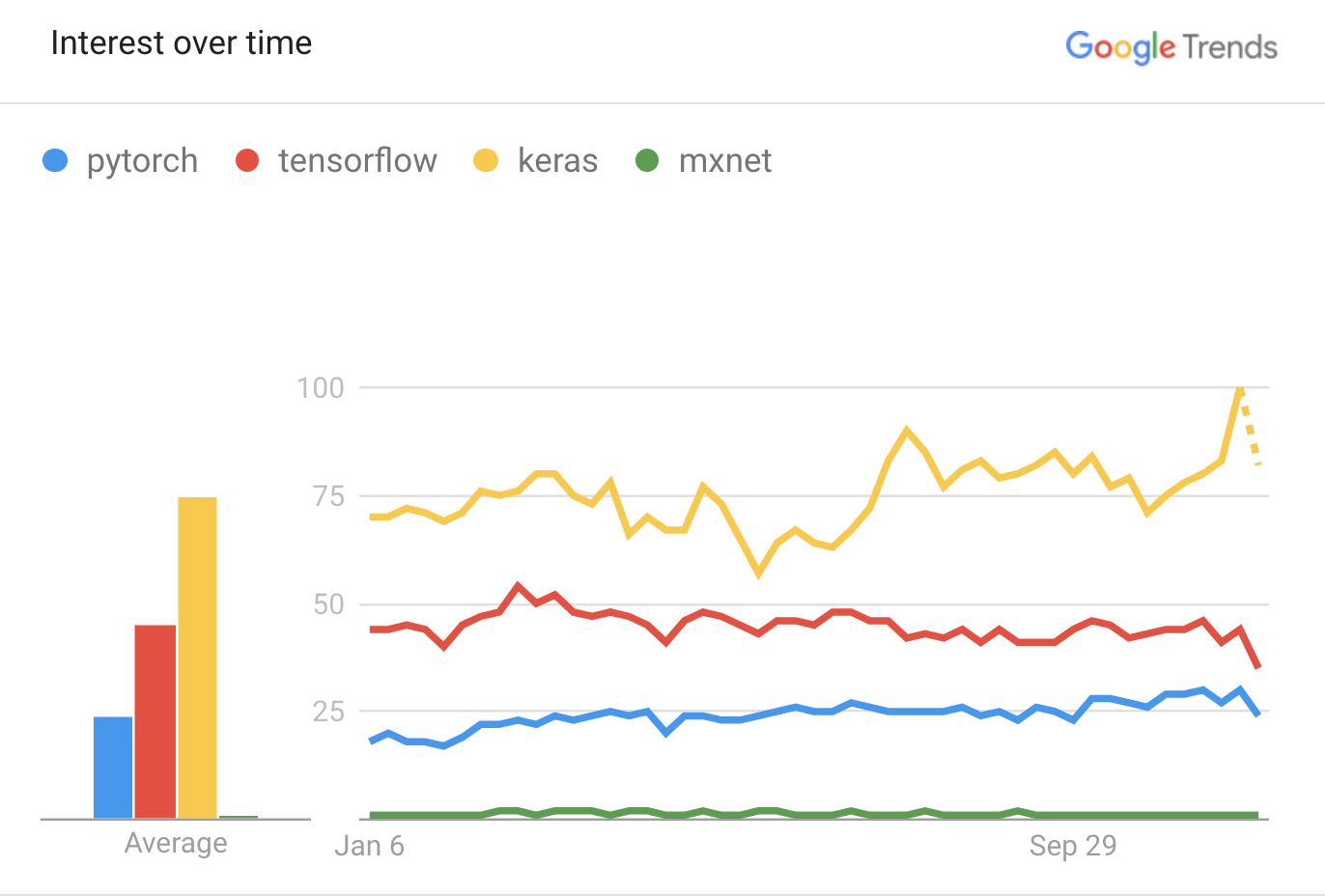

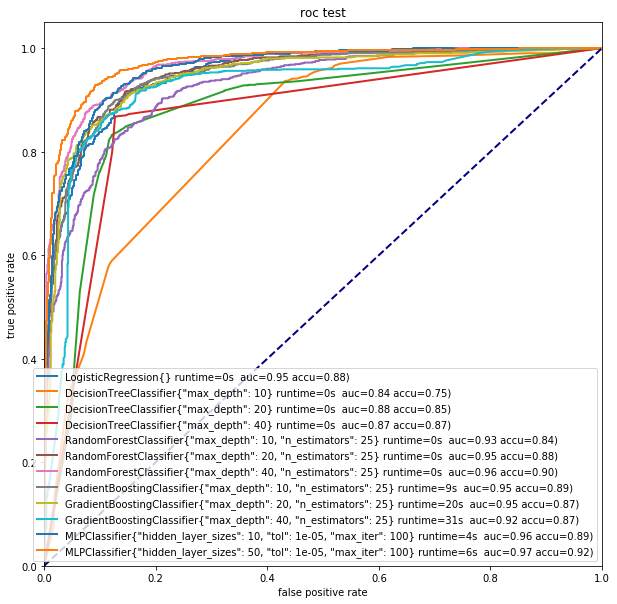

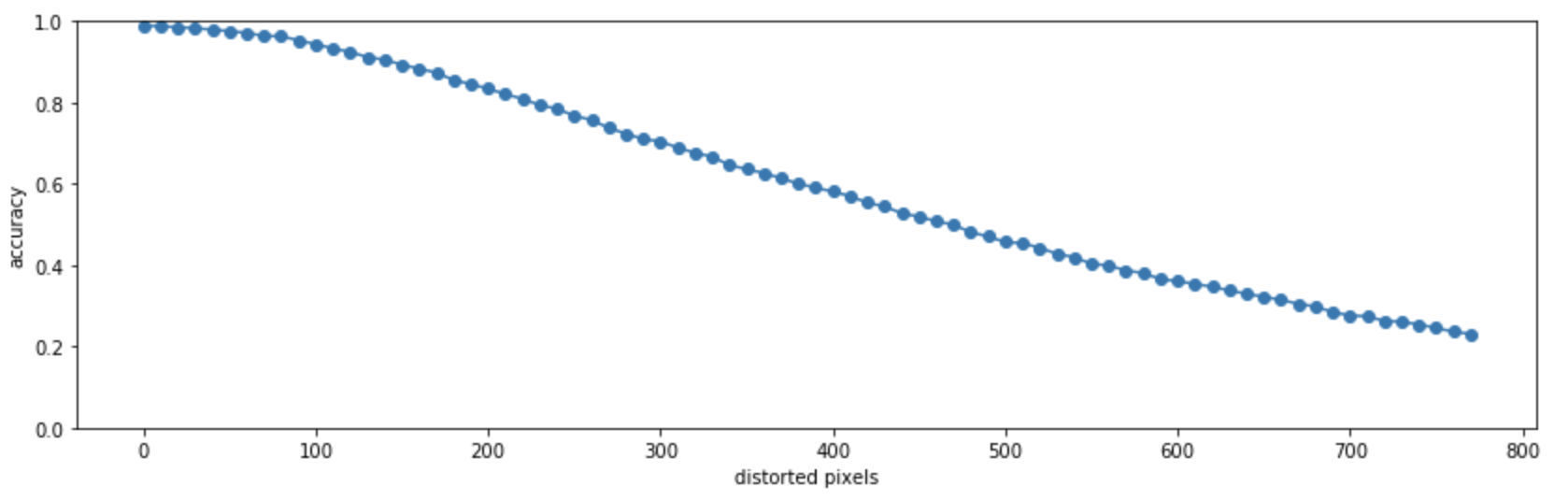

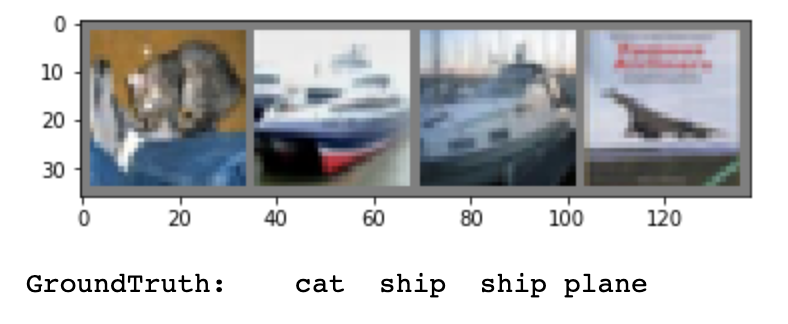

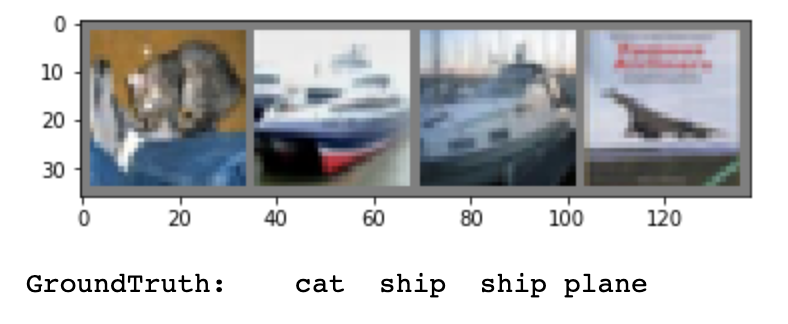

CIFAR-10 is a classic image recognition problem, consisting of 60,000 32x32 pixel RGB images (50,000 for training and 10,000 for testing) in 10 categories: plane, car, bird, cat, deer, dog, frog, horse, ship, truck. Convolutional Neural Networks (CNN) do really well on CIFAR-10, achieving 99%+ accuracy. The Pytorch distribution includes an example CNN for solving CIFAR-10, at 45% accuracy. I will use that and merge it with a Tensorflow example implementation to achieve 75%. We use torchvision to avoid downloading and data wrangling the datasets. Like in the MNIST example, I use Scikit-Learn to calculate goodness metrics and plots.

Continue reading