Similar posts recommendation with Doc2Vec - Part I

Marton Trencseni - Sat 03 December 2022 • Tagged with similarity, python, gensim, word2vec, doc2vec, pyml

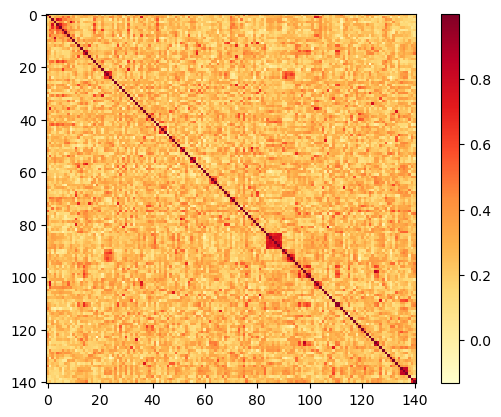

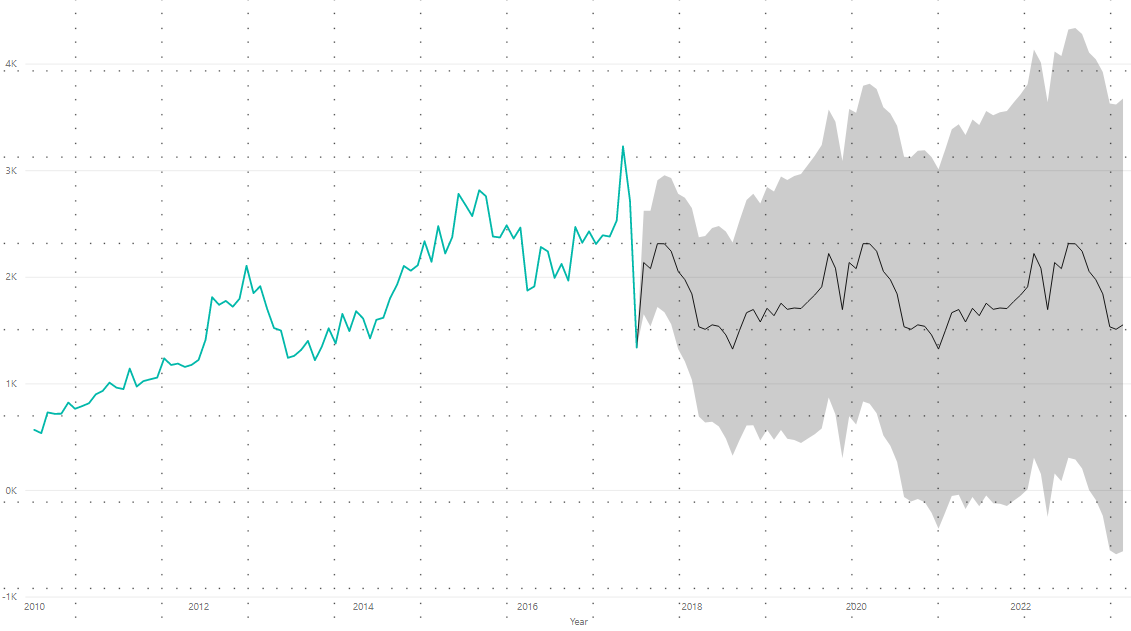

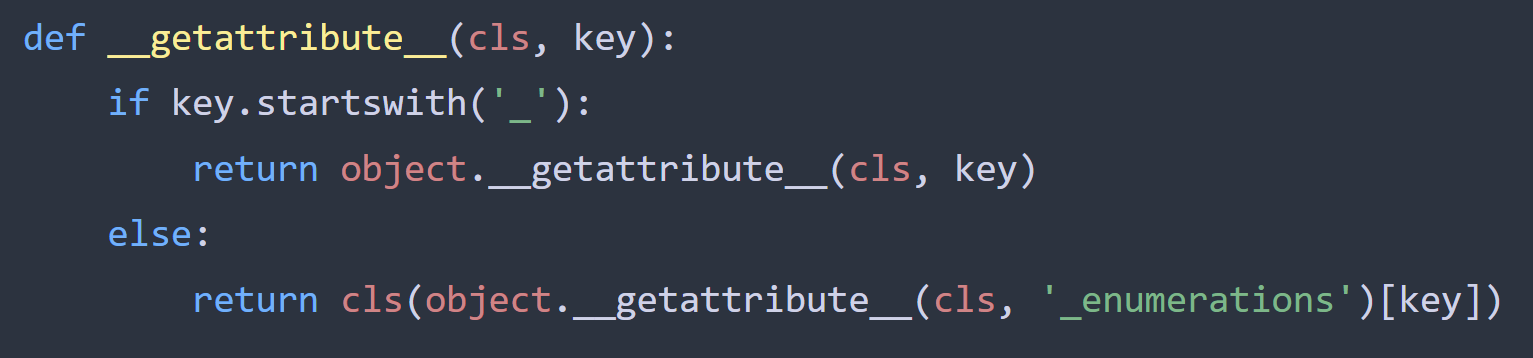

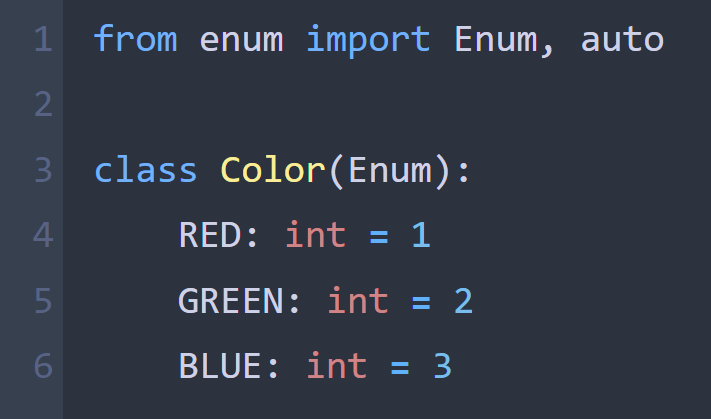

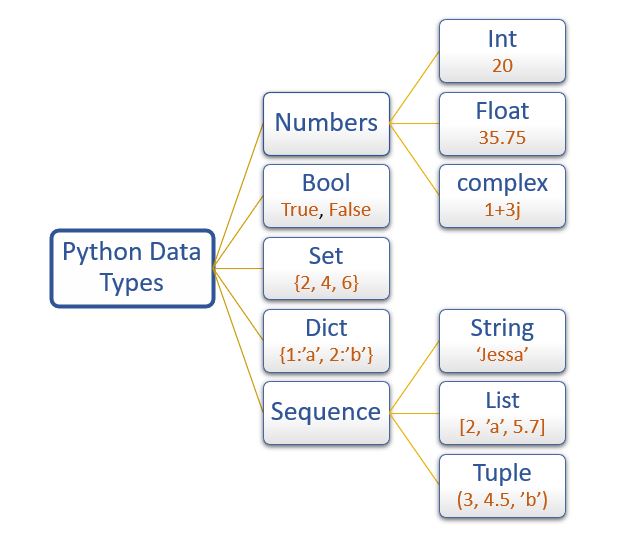

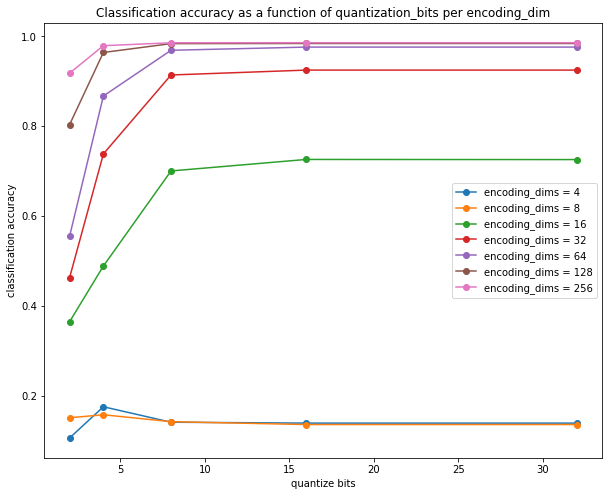

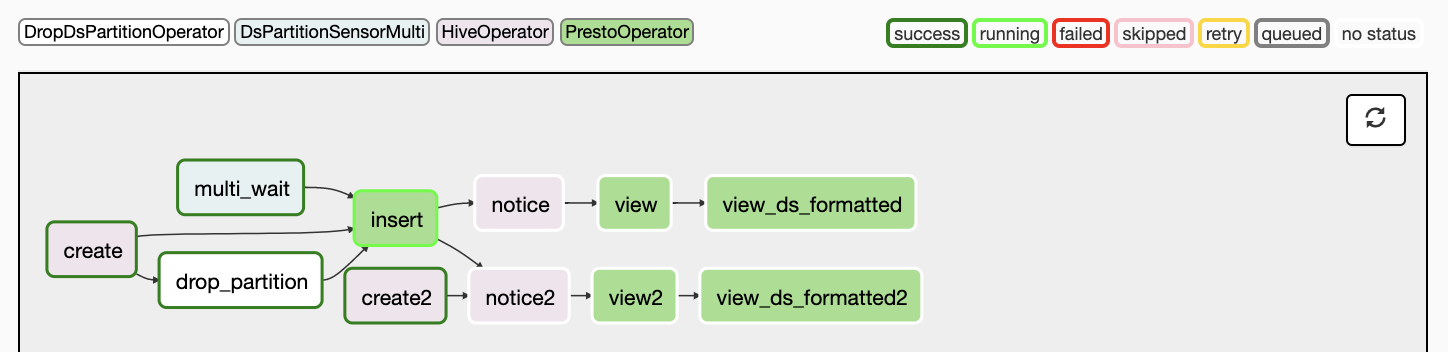

One of the things I learned at Facebook is the power of recommendations. Examples include People You May Know (PYMK), Groups You May Like (GYML) and Pages You May Like (PYML). Inspired by these, I plan to add an Articles You May Like widget to Bytepawn, based on the semantic similarity of blog posts. In this post, I use the Doc2Vec neural network architecture to compute the similarity between my blog posts and return the top 3 recommendations for each page.
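Once every post has a document vector, returning the top 3 recommendations per page means ranking all the other posts by how close their vectors are. The sketch below shows that ranking step in plain Python. It assumes the vectors have already been produced, for example by a trained Doc2Vec model, and it uses cosine similarity as the closeness measure. The post slugs and the 3-dimensional vectors are hypothetical and only for illustration; real Doc2Vec vectors are much larger (gensim's default `vector_size` is 100).

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors: dot(a, b) / (|a| * |b|)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction, treat it as unrelated
    return dot / (norm_a * norm_b)


def top_k_similar(
    embeddings: Dict[str, Vector], k: int = 3
) -> Dict[str, List[Tuple[str, float]]]:
    """For each post, return the k other posts with the highest cosine similarity."""
    recommendations: Dict[str, List[Tuple[str, float]]] = {}
    for post, vec in embeddings.items():
        scored = [
            (other, cosine_similarity(vec, other_vec))
            for other, other_vec in embeddings.items()
            if other != post  # never recommend a post to itself
        ]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        recommendations[post] = scored[:k]
    return recommendations


# Hypothetical post slugs and toy vectors standing in for Doc2Vec output.
embeddings: Dict[str, Vector] = {
    "doc2vec-part-1": [0.9, 0.1, 0.0],
    "word2vec-intro": [0.8, 0.2, 0.1],
    "ab-testing": [0.0, 0.1, 0.9],
    "bayesian-ab": [0.1, 0.0, 0.8],
    "gensim-tips": [0.7, 0.3, 0.0],
}

recs = top_k_similar(embeddings, k=3)
for post, similar in recs.items():
    print(post, "->", [(name, round(score, 3)) for name, score in similar])
```

With a trained gensim 4 model, `model.dv.most_similar(tag, topn=3)` performs the same kind of cosine-similarity ranking directly over the stored document vectors, so a hand-written loop like this is mainly useful for seeing what the widget computes.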